The importance of prompt engineering in preventing AI hallucinations

With today's platforms, you no longer need to start from scratch when creating a prompt: sample prompts are already available in the Microsoft Adoption Sample Solutions Gallery. Starting from something that takes you halfway is helpful, but it is still important to know how to write a good prompt yourself.

This document discusses the importance of prompt engineering in preventing artificial intelligence systems from generating incorrect or fabricated information, also known as hallucinations. It also shows how well-designed prompts can improve the quality of AI responses, making them more accurate and relevant and reducing the likelihood of hallucinations.

I emphasize the potential impact of hallucinations on business applications, including poor decisions, lost revenue, and reputational damage. I also highlight the need for human validation and robust prompt engineering practices to ensure the trustworthiness of AI systems in critical environments.

Prompt engineering

In generative AI systems, such as natural language processing (NLP) models, the instructions provided to the model to elicit a response (prompts) are of utmost importance. The production of incorrect or fabricated information unrelated to reality is one of the biggest challenges faced by the technical community that develops and applies these models in critical scenarios.

In enterprise applications, prompt engineering can significantly mitigate these errors, especially when the models are integrated into processes that demand high accuracy, such as CRM, ERP, and advanced data analytics systems.

Advantages of prompt engineering

- A well-crafted prompt can generate more accurate and relevant responses from an AI-based language model.

- Structuring prompts effectively allows you to get the desired answers faster without constant reformulations.

- Prompt engineering lets you customize the interaction with the AI model to meet the specific needs of a target audience or task.

The elements of a good prompt

Prompts function as instructions for the AI model (GPT) and generally consist of two main parts: the instruction and the data context. Focusing on key elements when creating custom AI capabilities is essential to getting the best answers.

According to the Microsoft AI Builder team’s prompt engineering guide, a set of elements should be part of your prompt. They are:

- Task: an instruction telling the Generative Pre-trained Transformer (GPT) model which task to perform.

- Context: describes the data that is acted on, along with any input variables.

- Expectations: conveys goals and expectations about the response to GPT.

- Output: helps GPT format the output the way you want.

A practical example of a well-crafted prompt:

Inappropriate prompt: “How can I become a Solution Architect?”

Improved prompt: “Design a professional development plan to build a Solution Architect career in 6 years. The plan should include goals and objectives, resources and tools, and a timeline for activities. Format the plan to be concise and practical, and present the information in a clear, easy-to-follow manner.”
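As a minimal sketch of how the four elements above could be assembled in code, the snippet below composes the improved prompt from its parts. The `PromptSpec` class and `build_prompt` function are hypothetical names introduced here for illustration; they are not part of AI Builder or any Microsoft API.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the four prompt elements (illustrative only)."""
    task: str
    context: str
    expectations: str
    output: str


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty elements into one prompt string, in a fixed order."""
    parts = [spec.task, spec.context, spec.expectations, spec.output]
    return " ".join(p.strip() for p in parts if p.strip())


spec = PromptSpec(
    task="Design a professional development plan to build a Solution Architect career in 6 years.",
    context="",  # the article's example supplies no input data, so this stays empty
    expectations="The plan should include goals and objectives, resources and tools, and a timeline for activities.",
    output="Format the plan to be concise and practical, and present the information in a clear, easy-to-follow manner.",
)
prompt = build_prompt(spec)
print(prompt)
```

Keeping the elements separate makes it easier to spot which one is missing when a prompt produces vague answers.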

Good instructions can prevent AI hallucinations

A hallucination occurs when a large language model (LLM) generates information not based on real-world facts or evidence. This may include fictitious events, incorrect data, or irrelevant results.

Designing effective prompts requires a deep understanding of how the model processes information and its limitations in terms of training data and heuristics. AI hallucinations can be exacerbated by vague, overly complex, or open-ended questions or commands, where the model can “improvise” responses that deviate from reality.

AI models are trained on large volumes of data and learn to make predictions by identifying patterns in that data. However, the accuracy of these predictions depends directly on the quality and completeness of the training data. If the training data is incomplete, biased, or flawed, the AI model may learn incorrect patterns, resulting in inaccurate predictions or hallucinations.

For example, an AI model trained on a medical image dataset can learn to identify cancer cells. However, if the dataset does not include images of healthy tissue, the AI model may incorrectly predict that healthy tissue is cancerous.

What causes AI hallucinations?

- Lack of clear context. When the language model lacks context, it can fabricate responses.

- Ambiguous requests. Vague questions can lead to random or inaccurate answers.

- Long generations. The longer the generated response, the greater the chance of hallucinations.

- No retrieval augmentation. LLMs without access to external sources, such as databases or search engines, can produce errors when they need to generate specific information.
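To illustrate the last cause, here is a toy sketch of grounding a prompt in retrieved text before it is sent to a model. Everything in it is an assumption made for illustration: the `DOCUMENTS` dictionary stands in for a real database or search engine, and the word-overlap ranking in `retrieve` is a deliberately naive substitute for a real search index.

```python
import re

# Hypothetical in-memory knowledge base; a real system would query a database or search index.
DOCUMENTS = {
    "refund-policy": "Refunds are issued within 14 days of purchase.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, top_k: int = 1) -> list[str]:
    """Rank documents by the number of words they share with the question (naive)."""
    q = tokenize(question)
    scored = [(len(q & tokenize(body)), doc_id) for doc_id, body in DOCUMENTS.items()]
    scored.sort(reverse=True)
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]


def grounded_prompt(question: str) -> str:
    """Build a prompt that restricts the model to the retrieved sources."""
    ids = retrieve(question)
    if not ids:
        # Nothing relevant found: ask the model to decline instead of guessing.
        return f"Answer 'I do not know' to the following question: {question}"
    sources = "\n".join(f"[{doc_id}] {DOCUMENTS[doc_id]}" for doc_id in ids)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say 'I do not know'.\n"
        f"Sources:\n{sources}\n"
        f"Question: {question}"
    )


print(grounded_prompt("How long does shipping take?"))
```

The point of the sketch is the shape of the prompt, not the retrieval method: the model receives the specific facts it needs along with an explicit instruction to decline when they are missing.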

How to prevent AI from generating hallucinations?

Creating good prompts can help AI generate more valid and accurate content. This process is called prompt engineering. Here are some techniques for reducing hallucinations, both when building the model and when writing the prompt:

- Train your AI using only data that is specific and relevant to the task the model will perform.

- Limit the possible outcomes that the model can predict, for example, using techniques such as “regularization,” which penalizes the model for making extreme predictions.

- Create a template for your AI to follow, which can help guide the model when generating output. The template should include elements such as a title, an introduction, a body, and a conclusion.

- Set clear expectations, guiding the model towards specific information and reducing the likelihood of hallucinations. Use specific language that guides the model, focus on known data sources or actual events, and ask for summaries or paraphrases of established sources.

- Break down complex prompts, decomposing requests into smaller, more specific parts to keep the language model focused on a narrower scope.

- Request verification by asking the LLM to cite the source of its statements. This encourages the model to produce better-informed and more reliable answers.

- Use chain of thought (CoT). By guiding the model through logical steps, you can control the path of reasoning and help the model reach accurate conclusions.
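The last three techniques (decomposition, source verification, and chain of thought) can be sketched as plain prompt-building helpers. This is a minimal illustration under stated assumptions: the function names `decompose` and `with_guardrails` and the exact instruction wording are hypothetical, and no particular model API is implied.

```python
def decompose(topic: str, aspects: list[str]) -> list[str]:
    """Turn one broad request into one narrow prompt per aspect."""
    return [f"Describe only the {aspect} of {topic}." for aspect in aspects]


def with_guardrails(prompt: str, cite: bool = True, step_by_step: bool = True) -> str:
    """Append chain-of-thought and source-verification instructions to a prompt."""
    extras = []
    if step_by_step:
        # Chain of thought: ask for intermediate reasoning before the answer.
        extras.append("Reason through the problem step by step before giving the final answer.")
    if cite:
        # Verification: ask for a source per claim, with an explicit way to admit uncertainty.
        extras.append("Cite the source of each factual statement, and say 'unknown' when you have none.")
    return " ".join([prompt, *extras])


prompts = decompose("a CRM migration", ["risks", "timeline"])
for p in prompts:
    print(with_guardrails(p))
```

Each narrow prompt would be sent separately, and the cited sources still need human checking, since a model can also invent citations.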

The impact of hallucinations on business applications

When AI generates incorrect or misleading information, it can result in poor decisions, lost revenue, reputational damage, and even legal issues, especially in industries that rely on accurate data for decision-making, such as finance, healthcare, and legal services.

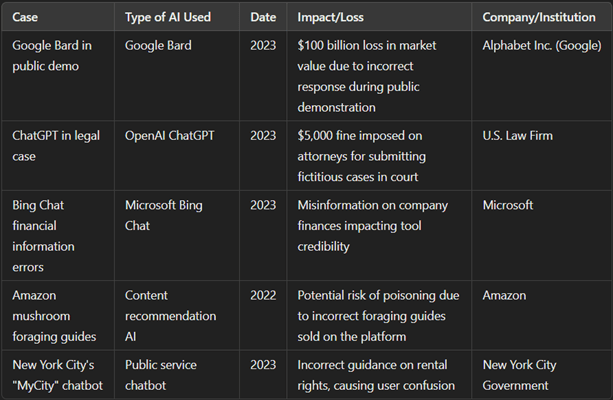

Several benchmarks highlight real-life incidents where AI hallucinations led to tangible losses, including customer support systems that offered incorrect advice and credit analysis tools that made incorrect financial predictions.

These cases highlight the importance of rigorous controls and human validation to mitigate the risks of hallucination in business applications, especially when losses can add up to significant amounts. The table below highlights how AI hallucinations directly impact efficiency and confidence in business solutions.

Conclusion

Prompt engineering is essential for preventing hallucinations in AI systems, ensuring more accurate and controlled data production suitable for sensitive business contexts. By strategically using prompt engineering techniques, solution architects and AI experts can reduce the room for error, creating AI models that add real value to the enterprise environment without compromising the integrity of critical data and processes. In this way, prompt engineering increases the reliability of AI applications, positioning it as a fundamental element in the safe and efficient digital transformation of business.

References

- When AI gets wrong: Addressing AI hallucinations and bias

- Advanced prompt engineering for reducing hallucination

- Microsoft Learn Challenge

- Benchmarks or articles:

- PYMNTS. “Businesses Confront AI Hallucination and Reliability Issues” (2024)

- Madison Marcus. “AI Hallucinations & Legal Pitfalls” (2023)

- Lettria. “Top 5 examples of AI hallucinations and why they matter” (2023)

- UC Berkeley Sutardja Center (scet.berkeley.edu)

- The Guardian (theguardian.com)

- Barracuda Blog (blog.barracuda.com)

- The Verge (theverge.com)

- Ars Technica (arstechnica.com)

- Microsoft AI Solutions in Business Processes: Outlook 2025